October 18, 2024

The Kids Online Safety and Privacy Act would require some platforms to safeguard the mental and physical health of children and teens

In July 2024, the U.S. Senate voted in favor of advancing S. 2073, the Kids Online Safety and Privacy Act (KOSPA). The legislation sets out a company's "duty of care," a legal term referring to the moral or legal responsibility to prevent harm, to protect children and 13- to 17-year-old teens from harms on certain online platforms, video games, messaging applications, and video streaming services (collectively, "covered platforms"). These harms include:

- "Consistent with evidence-informed medical information, the following mental health disorders: anxiety, depression, eating disorders, substance use disorders, and suicidal behaviors"

- "Patterns of use that indicate or encourage addiction-like behaviors by minors"

- "Predatory, unfair, or deceptive marketing practices, or other financial harms"

President Biden has urged Congress to pass the bill, but the Senate will need to reconcile KOSPA with the House Committee on Energy and Commerce's revised versions of the Children and Teens' Online Privacy Protection Act (COPPA 2.0) and the Kids Online Safety Act (KOSA), which address similar issues. If KOSPA remains intact through those negotiations and is signed into law, legislators will appoint a committee to provide further guidance on how to interpret KOSPA within 18 months of the law's passage.

In the meantime, affected companies can take the initiative now and begin a programmatic approach toward the bill's goals, which may include consulting with research experts in child development, user experience, and legal accountability.

Key takeaways

Under the most recent draft of KOSPA, covered platforms whose services are used, or likely to be used, by children and teens may be required to prioritize business, functional, and design requirements that address these users' developmental needs. While making platforms easy to use, compelling to consume, and socially engaging may remain a high priority, the Federal Trade Commission (FTC) and legislators have signaled a need to focus on health-centric design requirements, particularly for minors.

Changes to the user interface and user experience may need to satisfy harm-prevention requirements in ways that are clearly defined, operationalized, measured, and tracked. Oversight programs may also be needed to guide feature updates as potential new threats emerge, while balancing these safeguards against a company's goals of increasing market share and profits.

Impact for clients

To comply with KOSPA as proposed, impacted platforms may need to:

- Understand how, and how extensively, children and teens use the platform and, where applicable, design websites and apps with a greater emphasis on safeguarding children's and teens' health

- Build trust, understanding, and agency among children, teens, and their caregivers with respect to algorithmically presented content by providing tools and information that are measurably clear, conspicuous, and easy to comprehend

- Maintain accountability through third-party research, auditing, and public reporting:

  - Covered platforms may be required to use third-party research to understand how harm-prevention features are performing on their platforms and how experts (e.g., developmental psychologists) and end users perceive these features

  - Such findings may need to be published at least annually in a public report, produced by the covered platform and informed by third-party audits

What Can We Help You Solve?

Exponent human factors and health scientists study the physical, cognitive, and psychosocial development of children and teens to understand how different products may affect their safety, and help mitigate risks through expert analysis of user interaction and design features.

Exponent UX

Tackle difficult design challenges with user experience research, strategy, and consulting.

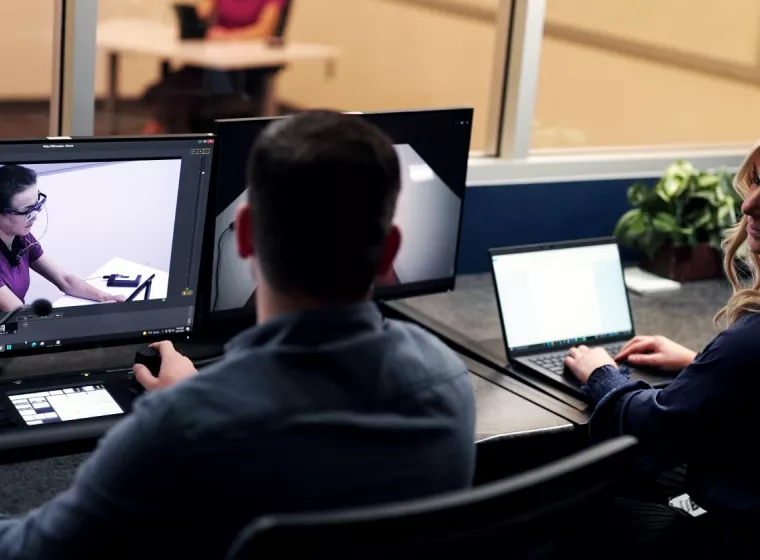

Usability Labs

Understand how your product is used in the real world with powerful qualitative and quantitative UX data.

Improve User Research & Testing

Human factors investigations for data-driven product and process design decisions.

Product Analysis & Improvement

We help you analyze, test, and improve products to meet modern safety and performance requirements.

Cutting-Edge User Research Testing & Evaluations

Pioneering scientific user experience research across the full lifecycle of consumer, industrial, automotive, and medical device products.

Insights